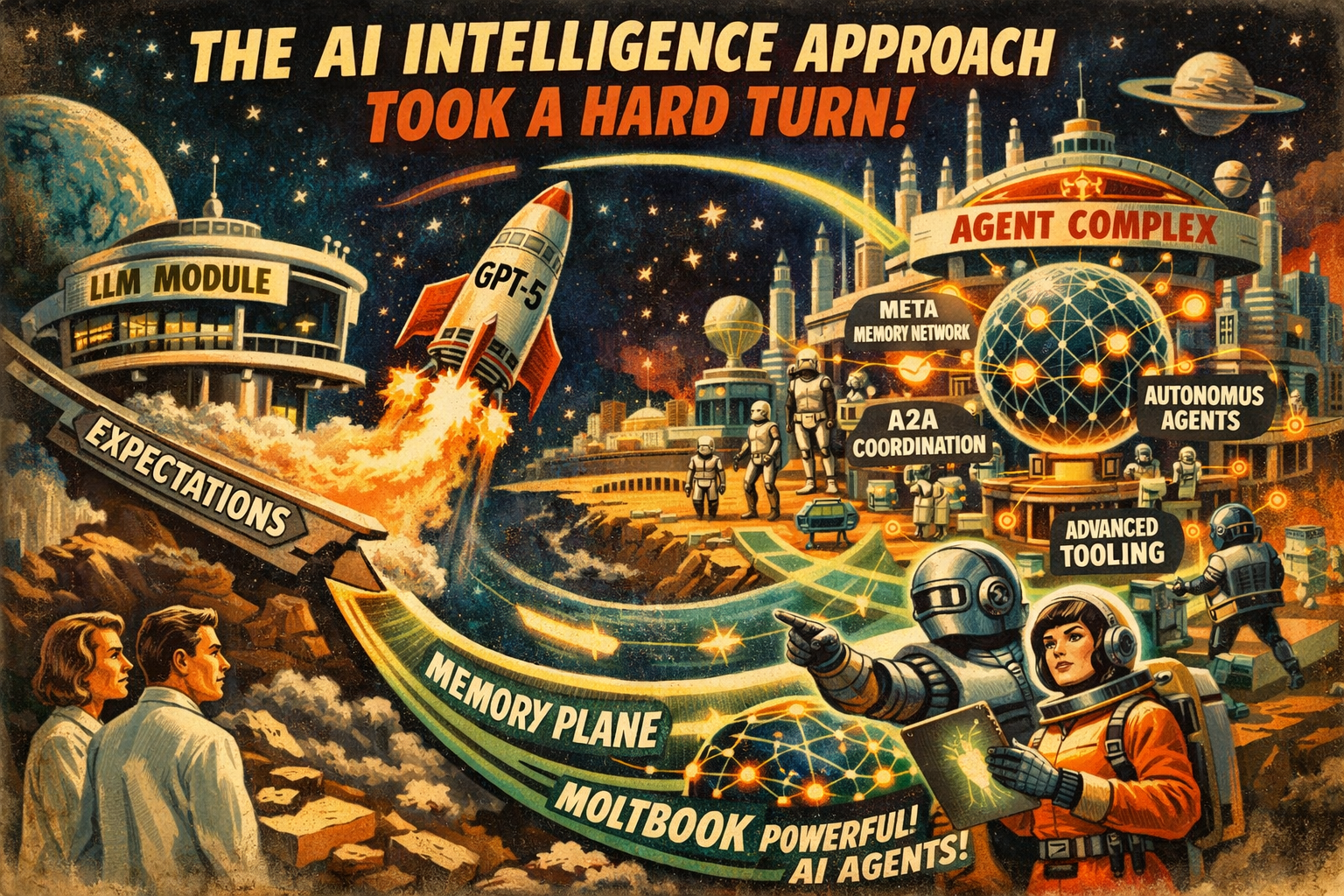

Q126 - The AI Intelligence Approach Took a Hard Turn

Just 18-24 months ago, most of what we called AI intelligence - reasoning, cognition, problem-solving - was almost entirely attributed to LLMs. There was a broad expectation that the next SOTA models (e.g., GPT-5) would deliver a step-function improvement in reasoning itself.

Fast forward to today, and the center of gravity has shifted decisively toward agents. Modern agents (Claude, OpenClaw, etc.) have learned to recursively decompose large, ambiguous problems into smaller units that current-generation LLMs can already handle well. As a result, expectations of LLMs have narrowed to two core capabilities: (a) reliably following instructions and (b) reasoning effectively over bounded, small-scale tasks.
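
To make the decomposition idea concrete, here is a minimal sketch of the loop. It is only an illustration, not how Claude or OpenClaw actually work: `solve` and the four callables it takes (`is_small`, `split`, `answer`, `combine`) are hypothetical names, and the toy stand-ins below replace what would be real LLM calls.

```python
from typing import Callable, List


def solve(
    task: str,
    is_small: Callable[[str], bool],             # hypothetical: "can one LLM call handle this?"
    split: Callable[[str], List[str]],           # hypothetical: ask the model to break the task up
    answer: Callable[[str], str],                # hypothetical: one bounded LLM call
    combine: Callable[[str, List[str]], str],    # hypothetical: merge sub-results
    max_depth: int = 5,
) -> str:
    """Recursively decompose a task until each piece is small enough to answer directly."""
    if max_depth == 0 or is_small(task):
        return answer(task)
    subtasks = split(task)
    if len(subtasks) <= 1:
        # Nothing left to decompose, so answer the task as-is.
        return answer(task)
    results = [
        solve(sub, is_small, split, answer, combine, max_depth - 1)
        for sub in subtasks
    ]
    return combine(task, results)


# Toy stand-ins so the sketch runs without any model behind it.
def toy_is_small(task: str) -> bool:
    return " and " not in task


def toy_split(task: str) -> List[str]:
    return task.split(" and ")


def toy_answer(task: str) -> str:
    return f"done({task})"


def toy_combine(task: str, results: List[str]) -> str:
    return "; ".join(results)


if __name__ == "__main__":
    print(solve("plan trip and book hotel and pack bags",
                toy_is_small, toy_split, toy_answer, toy_combine))
```

The two capabilities listed above map onto the callables: `split` and `combine` depend on the model following instructions reliably, while `answer` only ever sees a bounded, small-scale task.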

Agents, meanwhile, are evolving at a much faster pace. Tooling (MCP), skills, sub-agents and A2A coordination, memory, and execution frameworks are expanding almost weekly. In just the last 30 days, we’ve seen the launch of a planet-scale, persistent, self-regulating memory plane for AI agents (Moltbook). OpenClaw's growth has been equally striking.

Agent intelligence now clearly exceeds what any single LLM can deliver - and, more importantly, these capabilities compound in a strongly non-linear way.